Later, the agent will switch to scissors, and eventually back to rock, creating a cycle. As self-play progresses, a new agent will then choose to switch to paper, as it wins against rock. For example, in the game rock-paper-scissors, an agent may currently prefer to play rock over other options. Forgetting can create a cycle of an agent “chasing its tail”, and never converging or making real progress.

#E x starcraft ii how to#

The most salient one is forgetting: an agent playing against itself may keep improving, but it also may forget how to win against a previous version of itself.

#E x starcraft ii professional#

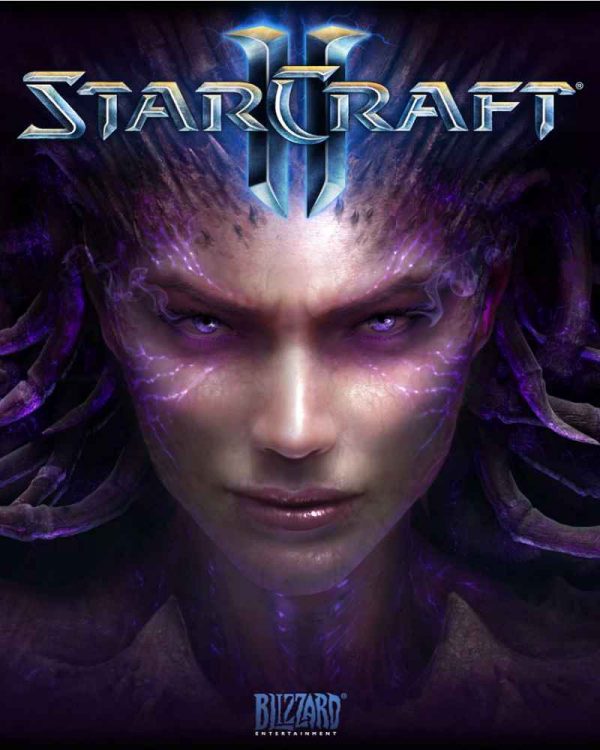

Dario "TLO" Wünsch, Professional StarCraft II playerĭespite its successes, self-play suffers from well known drawbacks. Overall, it feels very fair – like it is playing a ‘real’ game of StarCraft. And while AlphaStar has excellent and precise control, it doesn’t feel superhuman – certainly not on a level that a human couldn’t theoretically achieve. I’ve found AlphaStar’s gameplay incredibly impressive – the system is very skilled at assessing its strategic position, and knows exactly when to engage or disengage with its opponent. Games like StarCraft are an excellent training ground to advance these approaches, as players must use limited information to make dynamic and difficult decisions that have ramifications on multiple levels and timescales. For example, AlphaGo and AlphaZero established that it was possible for a system to learn to achieve superhuman performance at Go, chess, and shogi, and OpenAI Five and DeepMind’s FTW demonstrated the power of self-play in the modern games of Dota 2 and Quake III.Īt DeepMind, we’re interested in understanding the potential – and limitations – of open-ended learning, which enables us to develop robust and flexible agents that can cope with complex, real-world domains. Many advances since then have demonstrated that these approaches can be scaled to progressively challenging domains. When combined, the notions of learning-based systems and self-play provide a powerful paradigm of open-ended learning. Its developers used the notion of self-play to make the system more robust: by playing against versions of itself, the system grew increasingly proficient at the game. Instead of playing according to hard-coded rules or heuristics, TD-Gammon was designed to use reinforcement learning to figure out, through trial-and-error, how to play the game in a way that maximises its probability of winning. 
In 1992, researchers at IBM developed TD-Gammon, combining a learning-based system with a neural network to play the game of backgammon. Learning-based systems and self-play are elegant research concepts which have facilitated remarkable advances in artificial intelligence. We expect these methods could be applied to many other domains. Using the advances described in our Nature paper, AlphaStar was ranked above 99.8% of active players on, and achieved a Grandmaster level for all three StarCraft II races: Protoss, Terran, and Zerg. We chose to use general-purpose machine learning techniques – including neural networks, self-play via reinforcement learning, multi-agent learning, and imitation learning – to learn directly from game data with general purpose techniques.

AlphaStar now has the same kind of constraints that humans play under – including viewing the world through a camera, and stronger limits on the frequency of its actions* (in collaboration with StarCraft professional Dario “TLO” Wünsch).Our new research differs from prior work in several key regards:

#E x starcraft ii full#

Since then, we have taken on a much greater challenge: playing the full game at a Grandmaster level under professionally approved conditions. This January, a preliminary version of AlphaStar challenged two of the world's top players in StarCraft II, one of the most enduring and popular real-time strategy video games of all time. TL DR: AlphaStar is the first AI to reach the top league of a widely popular esport without any game restrictions.
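
The rock-paper-scissors cycle from the forgetting example can be illustrated with a toy sketch. This is not AlphaStar's training code. It assumes a deterministic agent that always plays a single move, and the helper names `naive_self_play` and `wins` are hypothetical, introduced here only for illustration. Each new agent simply plays the best response to its immediate predecessor.

```python
# Toy illustration of "forgetting" in naive self-play (an assumption-laden
# sketch, not the method used to train AlphaStar).

# Maps each move to the move that beats it.
BEATS = {"rock": "paper", "paper": "scissors", "scissors": "rock"}


def wins(a: str, b: str) -> bool:
    """Return True if move `a` beats move `b`."""
    return BEATS[b] == a


def naive_self_play(start: str, generations: int) -> list[str]:
    """Each new agent best-responds only to the most recent agent."""
    history = [start]
    for _ in range(generations):
        history.append(BEATS[history[-1]])
    return history


history = naive_self_play("rock", 6)
print(history)
# ['rock', 'paper', 'scissors', 'rock', 'paper', 'scissors', 'rock']

# The third agent (scissors) beats its predecessor (paper) but has
# "forgotten" how to beat the first agent (rock).
print(wins(history[2], history[1]))  # True
print(wins(history[2], history[0]))  # False
```

In this sketch the agent's choice returns to rock every three generations, so each generation beats only the one before it and the sequence never settles on a strategy that is robust to all earlier versions.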
